(This is joint work with Kristal Curtis and Tim Kraska.)

The Internet is littered with stories of traditional relational databases failing to meet the performance needs of fast-growing internet sites. The story usually goes as follows: Everything works great when the site is small. Suddenly, the site becomes popular and queries start running slowly. As a result, the developers abandon the relational database and switch to writing queries imperatively against a distributed key/value store. Examples of sites that use distributed key/value stores under the covers include Digg, Facebook, and Twitter, along with many others.

One key driver of this NoSQL movement is the fact that the data independence provided by an RDBMS actually exacerbates the scaling problem by hiding potentially expensive operations behind the relative simplicity of high-level declarative queries. In contrast, the simple get/put interface of most NoSQL stores provides predictable per-operation performance, independent of the size of the underlying database.

The NoSQL ‘solution,’ however, leads to its own set of problems. The use of imperative functions instead of declarative queries means that changes to the data model often require time-consuming rewrites. Additionally, developers have to manually parallelize key/value store requests or suffer delays due to sequential execution. In other words, the benefits of physical and logical data independence are lost.
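To make the cost concrete, here is a minimal sketch of what a hand-written "join" against a key/value store can look like. Everything in it is an assumption made for illustration: `KVStore` is an in-memory stand-in rather than any real store's client API, and the `("following", user)` and `("posts", user)` key layout is hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


class KVStore:
    """Hypothetical in-memory stand-in for a distributed key/value store."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def get(self, key, default=None):
        return self._data.get(key, default)


def timeline(store, user, per_user=2, limit=3):
    """Hand-written 'join': who `user` follows, then their recent posts."""
    followees = store.get(("following", user), [])

    # The lookups are parallelized by hand; a plain loop would issue
    # them one after another.
    with ThreadPoolExecutor(max_workers=8) as pool:
        post_lists = list(pool.map(
            lambda f: store.get(("posts", f), [])[:per_user], followees))

    # Merge the per-followee lists and sort by timestamp, newest first.
    merged = [post for posts in post_lists for post in posts]
    merged.sort(key=lambda post: post[0], reverse=True)
    return merged[:limit]


store = KVStore()
store.put(("following", "alice"), ["bob", "carol"])
store.put(("posts", "bob"), [(30, "bob: c"), (10, "bob: a")])
store.put(("posts", "carol"), [(20, "carol: b")])
result = timeline(store, "alice")
```

Note that the key layout and the execution strategy are both baked into `timeline`, so a change to how followers or posts are stored means rewriting the function.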

Instead, we propose PIQL (pronounced pickle), the Performance-Insightful Query Language. In addition to the physical and logical data independence provided by a traditional system, PIQL ensures the scale independence of all queries in an application at compile time. A scale-independent query is guaranteed to perform only a bounded number of operations no matter how large the underlying database grows.
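One way to picture the guarantee is as a static check over a query plan. The sketch below is not PIQL's actual compiler analysis; it is a simplified illustration under stated assumptions: a plan is a chain of steps, each step issues one key/value request per input tuple, and each request returns at most `max_results` tuples. The names `Step` and `operation_bound` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Step:
    name: str
    max_results: Optional[int]  # None means no declared limit


def operation_bound(steps: List[Step]) -> Optional[int]:
    """Upper bound on key/value requests, or None if the plan is unbounded."""
    total = 0
    inputs = 1  # the first step runs exactly once
    for step in steps:
        total += inputs  # one request per input tuple
        if step.max_results is None:
            # The result size depends on the database size, so no bound exists.
            return None
        inputs *= step.max_results
    return total


# At most 50 followees, at most 10 posts fetched per followee.
bounded = operation_bound([Step("following", 50), Step("posts", 10)])

# No limit on followees: the work grows with the data.
unbounded = operation_bound([Step("following", None), Step("posts", 10)])
```

Under these assumptions the first plan needs at most 51 requests (1 for the followee list plus 50 for posts) regardless of database size, while the second has no bound and would be rejected.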

Some systems, for example Google App Engine’s GQL, impose severe functional restrictions, such as removing joins, in order to ensure scalability. In contrast, PIQL employs language extensions, query compilation technology, and response-time estimation to provide scale independence over a larger and more powerful subset of SQL.

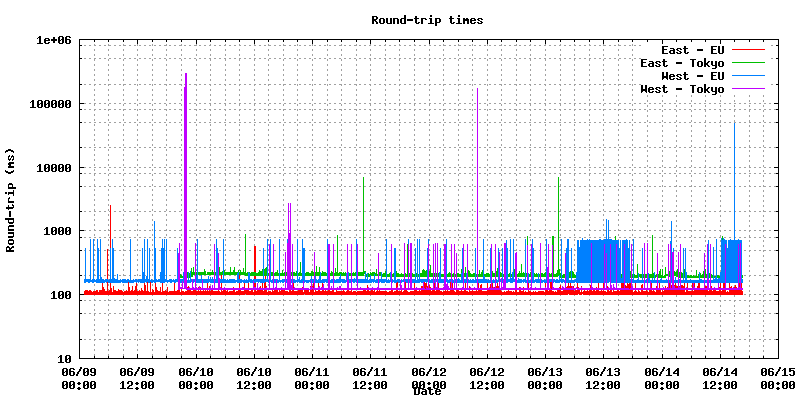

We evaluated our ideas by building a prototype on top of SCADS, a distributed key/value store first proposed at CIDR 2009. Using this prototype we constructed two benchmarks, one based on Twitter and the other on the user-facing queries from TPC-W.

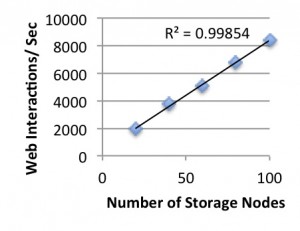

The throughput of the system while running the TPC-W benchmark increases linearly as machines are added to the cluster.

As the above figure shows, systems built using PIQL scale linearly as machines are added while keeping response time constant. If you’d like to learn more, you can check out our paper appearing in VLDB 2012 or look at some of the queries written in our LINQ-like Scala DSL.