The 2nd AMPLab research retreat was held Jan 11-13, 2012 at a mostly snowless Lake Tahoe. 120 people from 21 companies, several other schools and labs, and of course UC Berkeley spent 2.5 days getting an update on the current state of research in the lab, discussing trends and challenges in Big Data analytics, and sharing ideas, opinions and advice. Unlike our first retreat, held last May, which was long on vision and inspiring guest speakers, the focus of this retreat was current research results and progress. Other than a few short overview/intro talks by faculty, virtually all of the talks (16 out of 17) were presented by students from the lab. Some of these talks discussed research that had been recently published, but most of them discussed work that was currently underway, or in some cases, just getting started.

The first set of talks was focused on Applications. Tim Hunter described how he and others used Spark to improve the scalability of the core traffic estimation algorithm used in the Mobile Millennium system, giving them the ability to run models faster than real-time and to scale to larger road networks. Alex Kantchelian presented some very cool results for algorithmically detecting spam in tweet streams. Matei Zaharia described a new algorithmic approach to Sequence Alignment called SNAP. SNAP rethinks sequence alignment to exploit the longer reads that are being produced by modern sequencing machines and shows 10x to 100x speed ups over the state of the art, as well as improvements in accuracy.

The second technical session was about the Data Management portion of the BDAS (Berkeley Data Analytics System) stack that we are building in the AMPLab. Newly-converted database professor Ion Stoica gave an overview of the components. Short talks followed on SHARK (an implementation of the Hive SQL processor on Spark), Quicksilver (an approximate query answering system aimed at massive data), scale-independent view maintenance in PIQL (the Performance Insightful Query Language), and a streaming (i.e., very low-latency) implementation of Spark. These were presented by Reynold Xin, Sameer Agarwal, Michael Armbrust and Matei Zaharia, respectively. Undergrad Ankur Dave wrapped up the session by wowing the crowd with a live demo of the Spark Debugger that he built, showing how the system can be used to isolate faults in some pretty gnarly parallel data flows.

The Algorithms and People parts of the AMP agenda were represented in the 3rd technical session. John Duchi presented his results on speeding up stochastic optimization for a host of applications. He developed a parallelized method for introducing random noise into the process that leads to faster convergence. Fabian Wauthier reprised his recent NIPS talk on detecting and correcting for bias in crowdsourced input. Beth Trushkowsky talked about “Getting it all from the Crowd”, and showed how we must think differently about the meaning of queries in a hybrid machine/human database system such as CrowdDB.

A session on Machine-focused topics included talks by Ali Ghodsi on the PacMan caching approach for map-reduce style workloads, Patrick Wendell on early work on low-latency scheduling of parallel jobs, Mosharaf Chowdhury on fair sharing of network resources in large clusters, and Gene Pang on a new programming model and consistency protocol for applications that span multiple data centers.

The technical talks were rounded out by two presentations from students who worked with partner companies to get access to real workloads, logs and systems traces. Yanpei Chen talked about an analysis of the characteristics of various MapReduce loads from a number of sources. Ari Rabkin presented an analysis of trouble tickets from Cloudera.

As always, we got a lot of feedback from our Industrial attendees. A vigorous debate broke out about the extent to which the lab should work on producing a complete, industrial-strength analytics stack. Some felt we should aim to match the success of earlier high-impact projects coming out of Berkeley, such as BSD and Ingres. Others insisted that we focus on high-risk, further-out research and leave the systems building to them. There were also discussions about challenge applications (such as the Genomics X Prize competition) and how to ensure that we achieve the high degree of integration among the Algorithms, Machines and People components of the work, which is the hallmark of our research agenda. Another topic of great interest to the Industrial attendees was how to better facilitate interactions and internships with the always amazing and increasingly in-demand students in the lab.

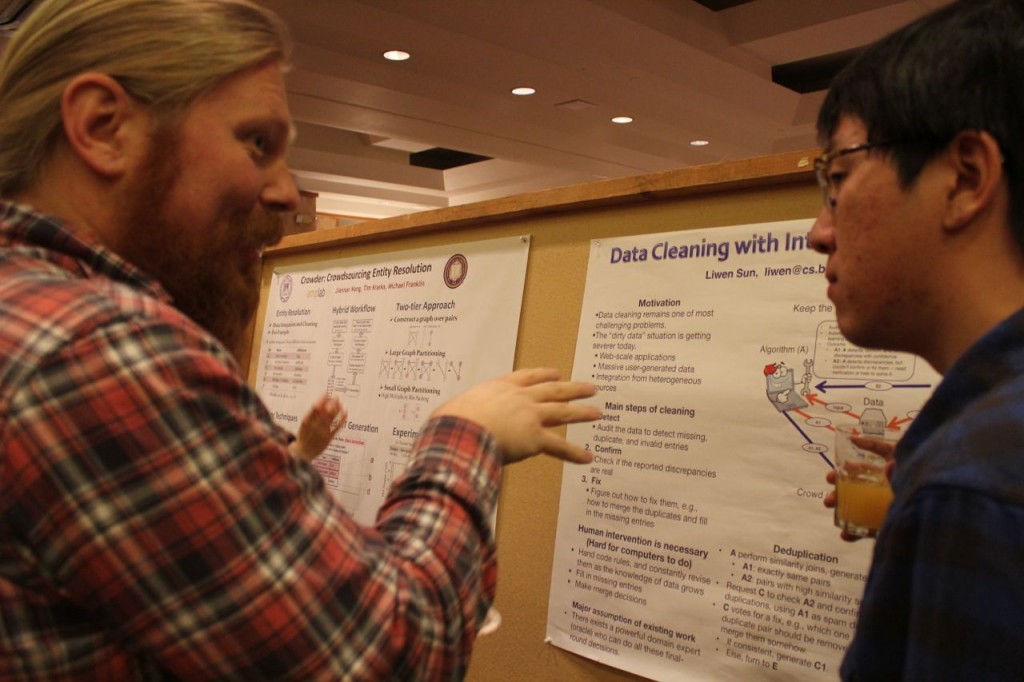

From a logistical point of view, we tried a few new things. The biggest change was with the poster session(s). As always, the cost of admission for students was to present a poster of their current research. This year, however, we also invited visitors to submit posters describing relevant work at their companies in general, and projects for which they were looking to hire interns in particular. We then partitioned the posters into two separate poster sessions (one each night), giving everyone a chance to spend more time discussing the projects that they were most interested in while still getting to survey the wide scope of work being presented. Feedback on both of these changes was overwhelmingly positive, so we’ll likely stick to this new format for future retreats.

Kattt Atchley, Jon Kuroda and Sean McMahon did a flawless job of organizing the retreat. Thanks to them and all the presenters and attendees for making it a very successful event.