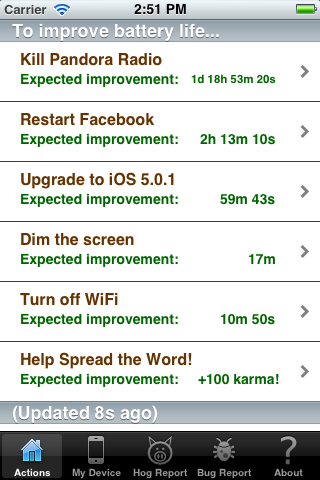

Carat is a new research project in the AMP Lab that aims to detect energy bugs (app behavior that consumes energy unnecessarily) using data collected from a community of mobile devices. Carat gives users actions they can take to improve battery life, along with the expected improvements.

Carat collects usage data on devices (we care about privacy), aggregates these data in the cloud, performs a statistical analysis using Spark, and reports the results back to users. In addition to the Action List shown in the figure, the app empowers users to dive into the data, answering questions like *How does my energy use compare to similar devices?* and *What specific information is being sent to the server?*

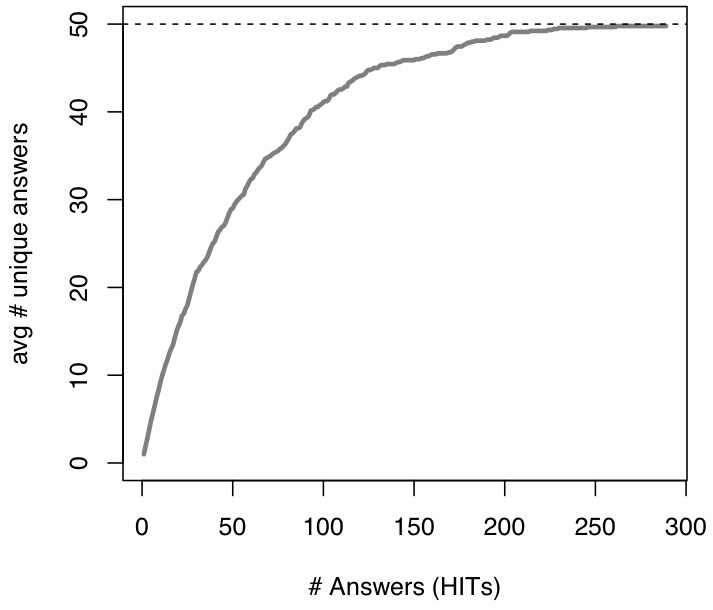

The key insight of our approach is that we can acquire implicit statistical specifications of what constitutes “normal” energy use under different circumstances. This idea of statistical debugging has been applied to correctness and performance bugs, but this is the first application to energy bugs. The project faces a number of interesting (and sometimes distinguishing) technical challenges, such as accounting for sampling bias, reasoning with noisy and incomplete information, and providing users with an experience that rewards them for participating.
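To make the idea of a statistical specification concrete, here is a minimal sketch in Python. It is not Carat's actual analysis: the function names, the sample drain rates, and the non-overlapping-interval criterion are all assumptions chosen for illustration. It treats battery drain rates observed across the community without a given app as the "normal" reference, and flags the app when drain rates observed with it running sit clearly above that reference.

```python
from math import sqrt
from statistics import mean, stdev


def mean_with_error(samples, z=1.96):
    """Return (mean, half-width of an approximate 95% confidence interval)."""
    m = mean(samples)
    half_width = z * stdev(samples) / sqrt(len(samples))
    return m, half_width


def looks_like_energy_hog(with_app, without_app):
    """Flag an app when drain with it running is clearly above the reference.

    "Clearly" here means the two approximate confidence intervals do not
    overlap. This is a conservative, illustrative criterion (an assumption),
    not the test Carat itself uses.
    """
    m_with, e_with = mean_with_error(with_app)
    m_without, e_without = mean_with_error(without_app)
    return (m_with - e_with) > (m_without + e_without)


# Hypothetical battery drain rates, in percent per hour.
reference = [4.1, 3.8, 4.4, 4.0, 3.9, 4.2, 4.3, 3.7]  # app not running
suspect = [6.9, 7.4, 6.5, 7.1, 7.8, 6.7, 7.2, 7.0]    # app A running
similar = [4.0, 4.3, 3.9, 4.1, 4.2, 3.8, 4.4, 4.0]    # app B running

print(looks_like_energy_hog(suspect, reference))
print(looks_like_energy_hog(similar, reference))
```

With these made-up numbers, app A is flagged and app B is not. A real analysis would also have to deal with the challenges named above, such as sampling bias and noisy or incomplete measurements, which this sketch ignores.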

We need your help testing our iOS implementation and gathering some initial data! If you have an iPhone or iPad with iOS 5.0 or later and are willing to give us a few minutes of your time, please click here.