Big Data is all the rage right now. Articles in newspapers and the popular press, a steady stream of books about “Super-crunchers” and “Numerati”, conferences on Big Data, new and newly-acquired companies, and the appearance of a class of workers called Data Scientists are just some of the more visible manifestations of this trend.

Why all the excitement? First of all, the amount of data available to be analyzed continues to grow as more and more human activity moves on-line. Second, continued cost improvements in processing power and storage, advances in scalable software architectures, and the emergence of cloud computing are enabling organizations of all types to consider trying to work with this data. This combination of data and analytics capacity holds the promise of enabling data-driven decision making, a holy grail of information technology from its earliest days.

Much of the industry and research activity around Big Data is focused on scalability to address the increasing volume of data. But data size is a problem for which there are known solutions. The real challenges in data analytics come from other causes. Some of the hardest problems stem from data heterogeneity. As more and more information is collected from more and more places, making sense of that information in a unified way becomes increasingly difficult.

Another source of problems comes not from the data but from the queries or questions asked over that data. Queries are often exploratory in nature and may be under- or over-specified. Furthermore, as one looks for more and more needles in bigger and bigger haystacks, it becomes possible to find evidence to support all sorts of hypotheses, even if that evidence is the result of randomness rather than actual causality. Finally, as we cast a wider net for both data and queries, we must deal with increasing ambiguity and must support decision making and predictions over incomplete, uncertain data.
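
The needles-and-haystacks point can be made concrete with a small simulation. The sketch below is purely illustrative and not taken from any AMPLab system: it generates a random target and a growing number of random "features" that are unrelated to it by construction, then reports the strongest correlation found. The function names, sample size, and feature counts are all assumptions chosen for the example.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def strongest_noise_correlation(n_samples, n_features, seed=0):
    """Largest |correlation| between a random target and pure-noise features.

    Every feature is drawn independently of the target, so any
    correlation found here is due to chance alone.
    """
    rng = random.Random(seed)
    target = [rng.gauss(0, 1) for _ in range(n_samples)]
    best = 0.0
    for _ in range(n_features):
        feature = [rng.gauss(0, 1) for _ in range(n_samples)]
        best = max(best, abs(pearson(feature, target)))
    return best


if __name__ == "__main__":
    # Same seed each time, so each larger run extends the smaller one.
    for n_features in (10, 100, 1000):
        strongest = strongest_noise_correlation(20, n_features)
        print(f"{n_features:5d} noise features -> strongest |r| = {strongest:.3f}")
```

Because the seed is fixed, each larger run examines a superset of the features in the smaller run, so the strongest chance correlation can only grow as more hypotheses are tested. Nothing about the data changed; only the number of questions asked did.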

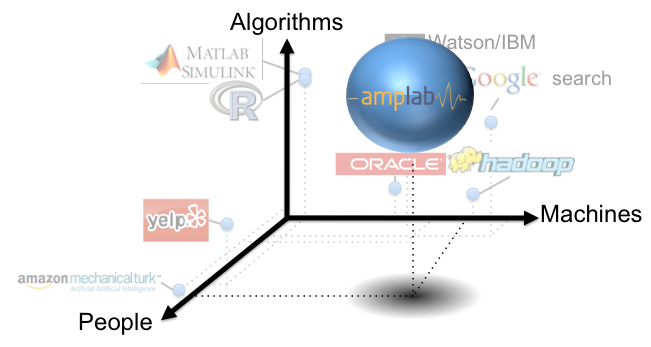

Addressing these problems requires more than simply scaling up existing systems and data analysis algorithms. Instead, what is needed is a rethinking of how analytics platforms are built. Stepping back a bit, the key resources we have for making sense of data are Algorithms, in the form of statistical machine learning and other data analytics methods; Machines, in the form of cluster and cloud computing; and People, who not only provide data and pose questions, but who can also help out with understanding the data and can address the “ML-Hard” questions that are not currently solvable by computers alone.

Most existing data-centric systems emphasize one of the three key resources. The premise behind the AMPLab is that a more holistic approach is needed. In other words, we want to attack the Big Data analytics problem by developing systems that more closely blend the resources of Algorithms, Machines, and People.

One way to think of this is as a “co-design” approach. Rather than inventing new algorithms or designing new computing platforms in isolation, we are considering all of these resources together. For example, the CrowdDB system invokes crowdsourcing to find missing database data, perform entity resolution, and make subjective comparisons. CrowdDB is a combination of M (a traditional relational database system) and P (human input, obtained through Amazon Mechanical Turk and our own mobile platform) with a bit of A thrown in to do data cleaning and quality assessment. As another example, recent progress by our machine learning group on parallelizing the Bootstrap method for confidence estimation is an instance of designing a new Algorithmic approach to cope with the emerging Machine environment of cluster computing.
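
For readers unfamiliar with the Bootstrap, here is a minimal sketch of the classical, single-machine percentile bootstrap for a confidence interval. It is not the parallelized variant mentioned above, only the textbook method that such work starts from. The function name `bootstrap_ci` and its parameters are assumptions made for this illustration.

```python
import random
import statistics


def bootstrap_ci(data, stat=statistics.mean, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap confidence interval for `stat` over `data`.

    Repeatedly resamples the data with replacement, recomputes the
    statistic on each resample, and reads the interval off the
    empirical distribution of those estimates.
    """
    rng = random.Random(seed)
    n = len(data)
    estimates = sorted(
        stat(rng.choices(data, k=n)) for _ in range(n_resamples)
    )
    lower_idx = int((alpha / 2) * n_resamples)
    upper_idx = int((1 - alpha / 2) * n_resamples) - 1
    return estimates[lower_idx], estimates[upper_idx]


if __name__ == "__main__":
    rng = random.Random(42)
    sample = [rng.gauss(100, 15) for _ in range(200)]
    low, high = bootstrap_ci(sample)
    print(f"sample mean = {statistics.mean(sample):.2f}")
    print(f"approx. 95% interval = ({low:.2f}, {high:.2f})")
```

Each resample is independent of the others, which is what makes the method a natural candidate for cluster computing. The cost is that every resample is as large as the original data set, and that cost is one reason a cluster-oriented redesign of the algorithm is interesting.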

The challenge of this type of integrated work is to get people whose expertise typically lies along one of the A, M, and P dimensions to work together effectively to solve big problems. We believe that the AMPLab is uniquely positioned to enable this collaboration, and as such, we look forward to contributing to the progress in making sense of Big Data.