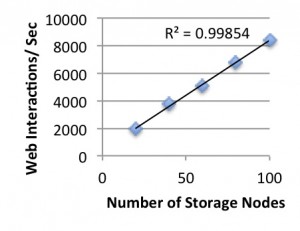

A typical page load requires sourcing and combining many pieces of data. For example, a frontend application like a newsfeed requires making many storage requests to fetch your name, your photo, your friends and their photos, and your friends’ most recent posts. Since loading a page requires making many of these storage requests, controlling storage request latency is crucial to reducing overall page load times.

Storage systems are therefore provisioned and tuned to meet these latency requirements. However, meeting them requires provisioning for peak, not average, load, which means the hardware is often underutilized. MapReduce batch analytics jobs are a natural fit for this slack capacity. Unfortunately, traditional storage systems cannot serve both a MapReduce workload and a frontend workload without hurting frontend latency. The problem is compounded by the dynamic, time-varying nature of frontend workloads, which makes it difficult to tune the storage system for a single set of conditions.

This is what motivated our work on Frosting. Frosting is a request scheduling layer on top of HBase, a distributed column store. It dynamically tunes its internal scheduling to meet the requirements of the current workload. Application programmers specify high-level performance requirements directly to Frosting as service-level objectives (SLOs): throughput or latency requirements on HBase operations. Frosting then carefully admits requests to HBase so that these SLOs are met.
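Frosting's SLO interface has not been published, so the following is only a minimal sketch of what "throughput or latency requirements" could look like, assuming a latency SLO is a bound on a percentile of observed latencies (computed with the nearest-rank method) and a throughput SLO is a minimum rate over a measurement window. The names `LatencySLO`, `ThroughputSLO`, and `is_met` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LatencySLO:
    """Hypothetical latency SLO: the given percentile of observed request
    latencies must not exceed target_ms."""
    percentile: float  # e.g. 99.0 for the 99th percentile
    target_ms: float

    def is_met(self, latencies_ms: Sequence[float]) -> bool:
        if not latencies_ms:
            return True  # no requests observed, so nothing was violated
        ordered = sorted(latencies_ms)
        # Nearest-rank percentile: the smallest sample such that at least
        # `percentile` percent of all samples are <= it.
        rank = max(1, math.ceil(self.percentile * len(ordered) / 100))
        return ordered[rank - 1] <= self.target_ms


@dataclass(frozen=True)
class ThroughputSLO:
    """Hypothetical throughput SLO: at least min_ops_per_s operations per second."""
    min_ops_per_s: float

    def is_met(self, completed_ops: int, window_s: float) -> bool:
        # Average throughput over the measurement window.
        return completed_ops / window_s >= self.min_ops_per_s
```

For example, `LatencySLO(percentile=99.0, target_ms=50.0)` would express "99% of frontend requests finish within 50 ms", while a MapReduce job might instead declare `ThroughputSLO(min_ops_per_s=1000.0)`.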

In the case of combining a high-priority, latency-sensitive frontend workload and a low-priority, throughput-oriented MapReduce workload, Frosting will continually monitor the frontend’s empirical latency and only admit requests from MapReduce when the frontend’s SLO is satisfied. For instance, if the frontend is easily meeting its latency target, Frosting might choose to admit more MapReduce requests since there is slack capacity in the system. If the frontend latency increases above its SLO due to increased load, Frosting will accordingly admit fewer MapReduce requests.
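The feedback loop described above can be sketched as a small controller. This is not Frosting's actual scheduling policy, which is unpublished; it is a hypothetical illustration that swaps in additive-increase/multiplicative-decrease (AIMD), assuming the controller tracks a sliding window of frontend latencies, compares their 99th percentile to the SLO once per control interval, and admits each MapReduce request with probability `admit_fraction`. All class, method, and parameter names are hypothetical.

```python
import random
from collections import deque


class BatchAdmissionController:
    """Hypothetical AIMD controller that admits low-priority (MapReduce)
    requests only while the frontend's observed latency meets its SLO."""

    def __init__(self, target_ms, window=100, step=0.05, backoff=0.5):
        self.target_ms = target_ms
        self.recent = deque(maxlen=window)  # sliding window of frontend latencies
        self.admit_fraction = 0.0  # probability of admitting a batch request
        self.step = step           # additive increase when there is slack
        self.backoff = backoff     # multiplicative decrease on SLO violation

    def record_frontend_latency(self, latency_ms):
        self.recent.append(latency_ms)

    def observed_p99(self):
        ordered = sorted(self.recent)
        n = len(ordered)
        # Nearest-rank 99th percentile: ceil(0.99 * n), using integer arithmetic.
        rank = max(1, (99 * n + 99) // 100)
        return ordered[rank - 1]

    def update(self):
        """Called once per control interval to adjust the admission rate."""
        if not self.recent:
            return
        if self.observed_p99() <= self.target_ms:
            # Frontend SLO met: probe for slack by admitting slightly more batch work.
            self.admit_fraction = min(1.0, self.admit_fraction + self.step)
        else:
            # Frontend SLO violated: back off quickly.
            self.admit_fraction *= self.backoff

    def admit_batch_request(self, rng=random):
        return rng.random() < self.admit_fraction
```

AIMD is borrowed from TCP congestion control: it cuts batch load sharply as soon as the frontend misses its target and reclaims slack capacity only gradually, which biases the system toward protecting the high-priority workload.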

This is ongoing work with Shivaram Venkataraman (shivaram@eecs) and Sara Alspaugh (alspaugh@eecs). No paper is publicly available yet. However, we’d love to talk to you if you’re interested in Frosting, especially if you use HBase in production or have workload traces we could access.

A session on Machine-focused topics included talks by Ali Ghodsi on the PacMan caching approach for MapReduce-style workloads, Patrick Wendell on early work on low-latency scheduling of parallel jobs, Mosharaf Chowdhury on fair sharing of network resources in large clusters, and Gene Pang on a new programming model and consistency protocol for applications that span multiple data centers.