Matlab is a great language for prototyping ideas. It comes with many libraries, especially for machine learning and statistics. But when it comes to processing natural language, Matlab is extremely slow. Because of this, many researchers pre-process the text in another language, convert it to numerical data, and then bring the resulting data into Matlab for further analysis.

I used to use Java for this. I would usually tokenize the text with Java, save the resulting matrices to disk, and read them into Matlab. After a while this procedure became cumbersome: I had to go back and forth between Java and Matlab, the process was prone to human error, and the codebase just looked ugly.

Recently, Jason Chen and I started putting together an NLP toolbox for Matlab. It is still a work in progress, but you can download the latest version from our GitHub repository [link]. There is also an installation guide that helps you install it properly on your machine. I have also built a simple map-reduce tool that lets many of the functions use all of the cores on the CPU.
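To give a feel for the map-reduce idea, here is a minimal sketch that counts tokens across a tiny corpus. It is not the toolbox's own map-reduce tool: the corpus and variable names are made up for illustration, and it assumes `parfor`, which spreads iterations over workers when the Parallel Computing Toolbox is available and otherwise runs as an ordinary loop.

```matlab
% Hypothetical mini-corpus; not taken from the toolbox.
docs = {'the movie was great', 'the plot was thin', 'great cast great score'};
n = numel(docs);
partial = zeros(1, n);   % one partial result per document

% Map step: every iteration is independent, so iterations can run on
% different cores.
parfor i = 1:n
    partial(i) = numel(strsplit(docs{i}, ' '));   % tokens in document i
end

% Reduce step: combine the partial results into a single total.
totalTokens = sum(partial);
fprintf('Total tokens: %d\n', totalTokens);
```

The pattern only pays off when the per-document work is expensive enough to outweigh the cost of starting and feeding the workers.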

So far the toolbox has modules for text tokenization (Bernoulli, Multinomial, tf-idf, n-gram tools), text preprocessing (stop word removal, text cleaning, stemming) and some learning algorithms (linear regression, decision trees, support vector machines and a Naïve Bayes classifier). We have also implemented evaluation metrics (precision, recall, F1-score and MSE). The support vector machine tool is a wrapper around the well-known LIBSVM, and we are working on another wrapper for SVM-light. A part-of-speech tagger is coming very soon too.
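As an illustration of what a tf-idf representation looks like, here is a small sketch in plain Matlab. It uses one common variant (raw counts times `log(N / df)`); the toolbox may weight terms differently, and the count matrix below is hypothetical.

```matlab
% Hypothetical term-count matrix: rows are documents, columns are terms.
X = [2 0 1;
     0 1 1;
     1 0 1];

nDocs = size(X, 1);
df    = sum(X > 0, 1);            % number of documents containing each term
idf   = log(nDocs ./ df);         % a term found in every document gets weight 0
tfidf = bsxfun(@times, X, idf);   % scale each term column by its idf

disp(tfidf);
```

The third term appears in all three documents, so its column is weighted down to zero, which is exactly the behaviour that makes tf-idf useful for suppressing uninformative words.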

I have been focusing on getting the different parts to run efficiently. For example, the tokenizer uses Matlab’s native hashmap data structure (`containers.Map`) so that it can tokenize the corpus in a single efficient pass.
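The idea behind a map-based tokenizer can be sketched in a few lines. This is not the toolbox's tokenizer, only a minimal example of counting tokens with `containers.Map`; the corpus and the naive whitespace splitting are assumptions made for illustration.

```matlab
% Hypothetical mini-corpus; not taken from the toolbox.
docs = {'the movie was great', 'the plot was thin', 'great cast great score'};

% Map from token (char) to its total count across the corpus.
counts = containers.Map('KeyType', 'char', 'ValueType', 'double');

for d = 1:numel(docs)
    tokens = strsplit(lower(docs{d}), ' ');   % naive whitespace tokenizer
    for t = 1:numel(tokens)
        tok = tokens{t};
        if isKey(counts, tok)
            counts(tok) = counts(tok) + 1;    % seen before: increment
        else
            counts(tok) = 1;                  % first occurrence
        end
    end
end

vocab = keys(counts);   % cell array of the unique tokens
fprintf('%d unique tokens; "great" appears %d times\n', ...
        numel(vocab), counts('great'));
```

Each lookup and update goes through the hash map, so the corpus is read only once and the vocabulary never has to be searched linearly.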

We are also adding examples and demos for this toolbox. The first example is a sentiment analysis tool that uses this library to predict whether a movie review is positive or negative. The code reaches an F1 score of 0.83; out of 200 movie reviews, it misclassified only 26.
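For readers who want to see how the F1 score relates to the number of mistakes, here is a small worked example. The confusion-matrix counts are hypothetical: the actual breakdown for the demo is not reported here, and this is just one split that is consistent with 26 errors out of 200 and an F1 of about 0.83.

```matlab
% Hypothetical confusion-matrix counts (NOT the demo's actual breakdown).
tp = 64;    % positive reviews predicted positive
fp = 13;    % negative reviews predicted positive
fn = 13;    % positive reviews predicted negative
tn = 110;   % negative reviews predicted negative

total  = tp + fp + fn + tn;   % 200 reviews
errors = fp + fn;             % 26 misclassified reviews

precision = tp / (tp + fp);
recall    = tp / (tp + fn);
f1        = 2 * precision * recall / (precision + recall);
accuracy  = (tp + tn) / total;

fprintf('errors=%d/%d  precision=%.2f  recall=%.2f  F1=%.2f  accuracy=%.2f\n', ...
        errors, total, precision, recall, f1, accuracy);
```

Note that F1 ignores the true negatives, so the same 26 errors can give a different F1 depending on how they are split between the two classes; accuracy, by contrast, is fixed at 0.87 for 26 errors out of 200.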

Please try the toolbox, and keep in mind that it is still a work in progress: some functions are still slow and we are working to improve them. I would love to hear what you think. If you want us to implement something that might be useful to you, just let us know.

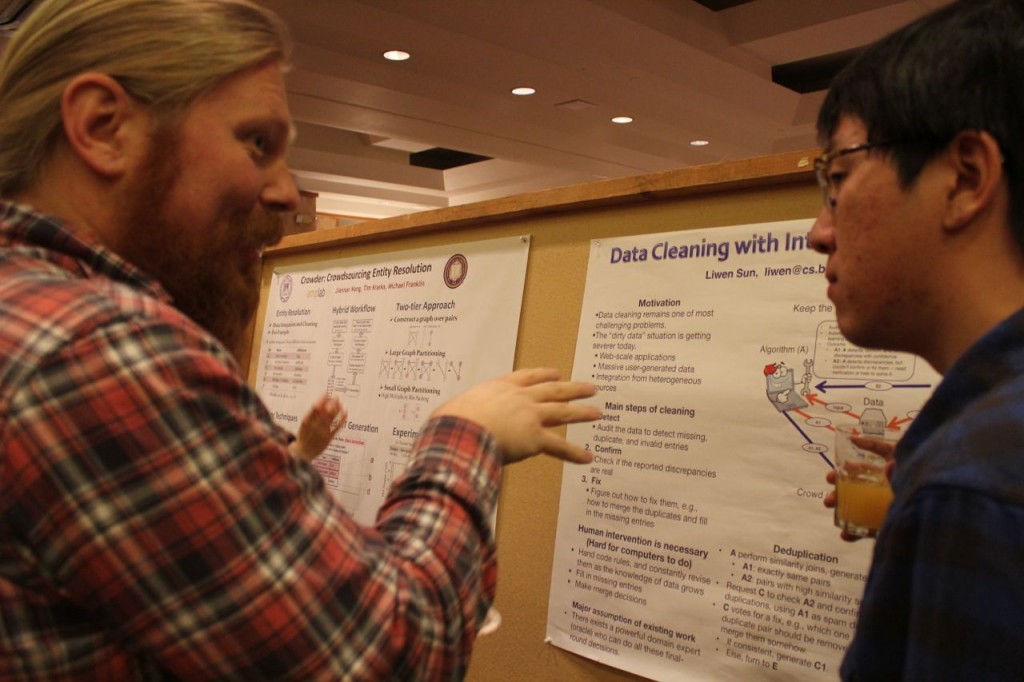

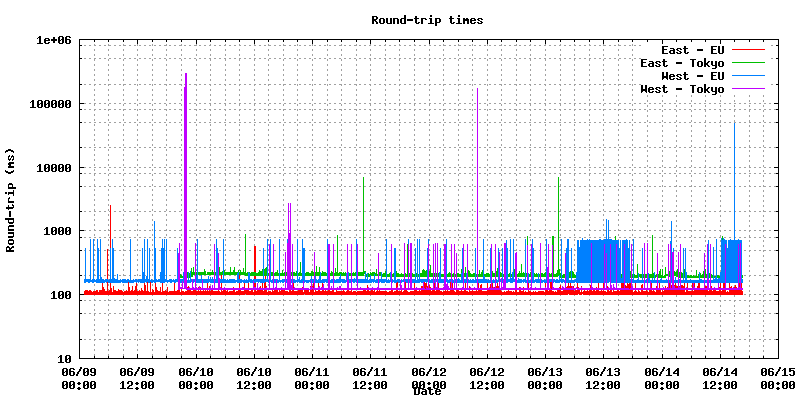

A session on Machine-focused topics included talks by Ali Ghodsi on the PacMan caching approach for map-reduce style workloads, Patrick Wendell on early work on low-latency scheduling of parallel jobs, Mosharaf Chowdhury on fair sharing of network resources in large clusters, and Gene Pang on a new programming model and consistency protocol for applications that span multiple data centers.
