Crowdsourcing labor markets like Amazon Mechanical Turk are now used by a number of companies. For example, castingwords uses Mechanical Turk to transcribe audio and video files, and Card Munch uses the crowd to enter the contents of business cards into iPhone address books.

But how long will it take Card Munch to convert a batch of 200 cards into a digital address book? And how long should castingwords expect to wait for the transcriptions of 100 audio files to come back from Mechanical Turk?

Completion time is a stochastic quantity. First, it depends on how many workers (a.k.a. Turkers) are available on Mechanical Turk. Second, it depends on which competing tasks are available on the market. Nobody wants to transcribe a long audio file for $6 if they can work on easier $6 tasks. In other words, everybody has some kind of utility function in mind that they try to maximize. Some want to earn more money in less time; others may want to work on interesting jobs. Regardless of the form of this utility function, workers are less likely to pick your task if there are more appealing tasks on the market. That’s what we are as humans: selfish utility maximizers!

An interesting property of the Mechanical Turk market is that workers select their tasks from two main queues: “Most recently posted” and “Most HITs available”. This produces an interesting pattern in task completion times: they follow a power-law distribution. While many tasks get completed relatively quickly, some others starve. When you post a task with 100 subtasks, say 100 business cards that need to be digitized, the task appears at the top of the “new tasks” list, so more people notice it and more people work on it. You may get 70 of your subtasks completed in a few minutes. But as other requesters post their tasks on the market, your task moves down the new tasks list and fewer people see it. The completion rate drops dramatically. By the time your task reaches the fifth page of the list, you will be lucky if people notice it at all. This is why many requesters consider canceling their task at this point and reposting it, just to appear on the first page of the task list again.
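To make the "sliding down the list" effect concrete, here is a minimal sketch. It is a toy model, not a description of how Mechanical Turk actually ranks or exposes tasks: the function name and every parameter (arrival rate of competing tasks, tasks per page, how fast attention decays per page) are assumptions picked purely for illustration.

```python
def expected_completions(n_subtasks=100, minutes=120, arrivals_per_min=1.0,
                         tasks_per_page=10, base_rate=5.0, page_decay=0.5):
    """Expected cumulative number of completed subtasks, minute by minute.

    Toy assumptions (not measured values):
      - competing tasks arrive at a constant rate and push ours down the list
      - on the first page, workers complete `base_rate` subtasks per minute
      - each page further down multiplies that rate by `page_decay`
    """
    remaining = float(n_subtasks)
    cumulative = []
    for t in range(minutes):
        rank = arrivals_per_min * t            # tasks now listed above ours
        page = int(rank // tasks_per_page)     # 0 means the first page
        rate = base_rate * page_decay ** page  # attention shrinks page by page
        completed = min(remaining, rate)
        remaining -= completed
        cumulative.append(n_subtasks - remaining)
    return cumulative


curve = expected_completions()
for minute in (10, 20, 50, 120):
    print(minute, round(curve[minute - 1], 2))
```

Under these made-up parameters, half of the subtasks finish while the task is still on the first page, and the curve then flattens: each additional page contributes half as much as the previous one, so the last few subtasks take a very long time.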

Wherever we find such priority queues (in this case, the list of “new tasks”), we see similar patterns in task completion times. In fact, we all have an intuitive understanding of the effect of a priority queue: we click on the top link on the first page of Google search results far more often than on a link that appears on the 10th page. See Barabasi’s article in Nature on this phenomenon (also see the supplementary material on this website in case you are interested in the underlying queuing theory).

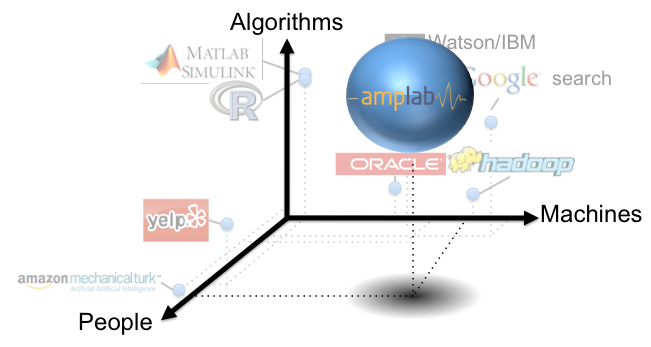
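The priority queue effect can be sketched in a few lines. What follows is a simplified simulation in the spirit of the priority queue model from that line of work, as I understand it, not a reproduction of the published analysis. The function name, the queue length, and the probability `p` of always serving the highest-priority item are illustrative assumptions.

```python
import random


def simulate_waits(steps=20000, queue_len=2, p=0.99, seed=0):
    """Simulate a fixed-length priority queue and return waiting times.

    At every step one queued item is executed and replaced by a new item
    with a random priority. With probability p the highest-priority item is
    chosen; otherwise an item is chosen uniformly at random.
    """
    rng = random.Random(seed)
    # Each entry is (priority, step at which the item entered the queue).
    queue = [(rng.random(), 0) for _ in range(queue_len)]
    waits = []
    for t in range(1, steps + 1):
        if rng.random() < p:
            i = max(range(queue_len), key=lambda k: queue[k][0])
        else:
            i = rng.randrange(queue_len)
        waits.append(t - queue[i][1])   # how long the executed item waited
        queue[i] = (rng.random(), t)    # a fresh item takes its place
    return waits


waits = simulate_waits()
share_immediate = sum(w == 1 for w in waits) / len(waits)
print("share executed immediately:", round(share_immediate, 3))
print("longest wait:", max(waits))
```

Most items are served the moment they arrive, while a low-priority item can sit in the queue for a very long time. That mix of quick completions and starved stragglers is the same shape described above for tasks on the market.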

Human behavior is hard to predict, and to answer our completion time question we need to unlock this fascinating long-tail complexity. This is an ongoing research project in the community. So far I have approached the problem from two different perspectives. First, I have used a statistical technique called survival analysis (specifically, the Cox proportional hazards model). Second, I have explored the idea of Turkers as utility maximizers. Interested readers can refer to this paper. I’d like to highlight that this work is still at an early stage, and I will write more about extensions of it in future blog posts.
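For readers new to survival analysis, here is a small sketch of its core idea: tasks that are still unfinished when you stop watching (censored observations) carry information too. Note that this is the simpler Kaplan-Meier estimator, not the Cox proportional hazards model used in the work above, and the durations in the example are invented toy numbers, not Mechanical Turk data.

```python
def kaplan_meier(durations, completed):
    """Estimate S(t), the probability that a task is still unfinished at t.

    durations: observed time for each task (e.g. minutes since posting)
    completed: True if the task finished at that time, False if it was
               still open when observation stopped (censored)
    Returns a list of (time, survival_probability) at each completion time.
    """
    events = sorted(zip(durations, completed))
    at_risk = len(events)
    survival = 1.0
    curve = []
    i = 0
    while i < len(events):
        t = events[i][0]
        finished = 0
        leaving = 0
        # Group all observations that share the same time.
        while i < len(events) and events[i][0] == t:
            finished += int(events[i][1])
            leaving += 1
            i += 1
        if finished:
            survival *= 1 - finished / at_risk
            curve.append((t, survival))
        at_risk -= leaving  # finished and censored tasks both leave the pool
    return curve


# Invented toy data: minutes observed, and whether the task completed.
toy_minutes = [2, 3, 3, 5, 8, 8, 12, 20]
toy_completed = [True, True, True, True, False, True, False, False]
for t, s in kaplan_meier(toy_minutes, toy_completed):
    print(t, round(s, 3))
```

A Cox model builds on the same notion of an "at risk" set, but additionally relates the completion hazard to covariates such as reward or task type.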