What does a query result mean when the data comes from the crowd? This is one of the fundamental questions raised by CrowdDB, a hybrid human/machine database system developed here in the AMP lab. For example, consider what could be thought of as the simplest query: SELECT * FROM table. If tuples are being provided by the crowd, how do you know when the query is complete? Can you really get them all?

In traditional database systems, query processing is based on the closed-world assumption: all data relevant to a query is assumed to reside in the database. When the data is crowdsourced, we find that this assumption no longer applies; existing information could be extended by further by asking the crowd. However, in our current work we show that it is possible to understand query results in the “open-world” by reasoning about query completeness and the cost-benefit tradeoff of acquiring more data.

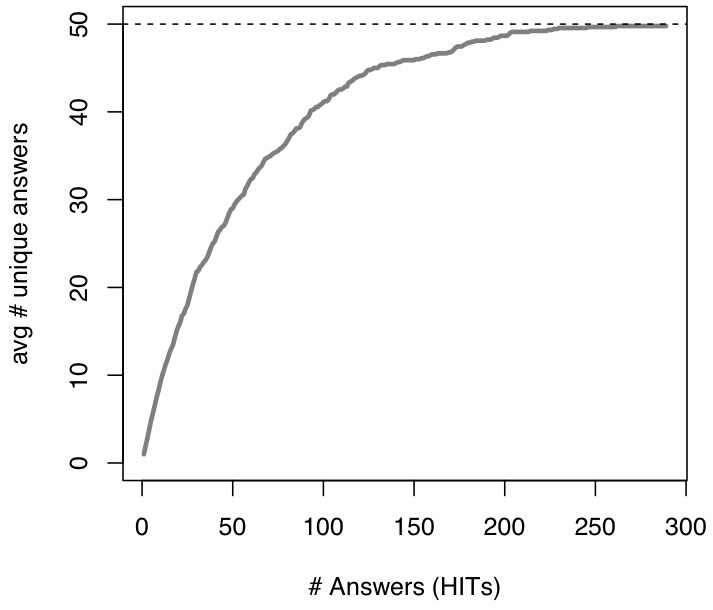

Consider asking workers on a crowdsourcing platform (e.g., Amazon Mechanical Turk) to provide items in a set (one at a time). As you can imagine, answers arriving from the crowd follow a pattern of diminishing returns: initially there is a high rate of arrival for previously unseen answers, but as the query progresses the arrival rate of new answers begins to taper off. The figure below shows an example of this curve when we asked workers to provide names of the US States.

Number of unique answers seen vs. total number of answers in the US States experiment (average)

This behavior is well-known in fields such as biology and statistics, where this type of figure is known as the Species Accumulation Curve (SAC). This analysis is part of the species estimation problem; the goal is to estimate the number of distinct species using observations of species in the locale of interest. We apply these techniques in the new context of crowdsourced queries by drawing an analogy between observed species and worker answers from the crowd. It turned out that the estimation algorithms sometimes fail due to crowd-specific behaviors like some workers providing many more answers than others (“streakers vs. samplers”). We address this by designing a heuristic that reduces the impact of overzealous workers. We also demonstrate a heuristic to detect when workers are consulting the same list on the web, helpful if the system wants to switch to another data gathering regime like webpage scraping.

Species estimation techniques provide a way to reason about query results, despite being in the open world. For queries with a bounded result size, we can form a progress estimate as answers arrive by predicting the cardinality of the result set. Of course, some sets are very large or contain items that few workers would think of or find (the long tail), so it does not make sense to try to predict set size. For these cases, we propose a pay-as-you-go-approach to directly consider the benefit of asking the crowd for more answers.

For more details, please check out the paper.