Many of today’s data-intensive applications at scale require always-on operation and low-latency query execution, which has led to quite a shake-up in the data management space. While the battle between NoSQL, NewSQL, and “old SQL” involves many factors including market forces and data models, one factor is fundamental: some semantics are fundamentally at odds with the goals of always-on operation and low latency execution. Since at least 1985, the database community has recognized that the gold standard of ACID database transactions—serializability, providing single-system programmability—is unachievable with these goals: a system facing network partitions between servers cannot provide both serializability and guarantee a response from every server (what we’ll call “high availability”). In the absence of partitions, this high availability corresponds to the ability to provide low-latency operation, especially across datacenters. Even the latest and greatest production-ready serializable databases still top out at roughly 20 writes/record/second due to fundamental coordination bottlenecks.

ACID in the Real World

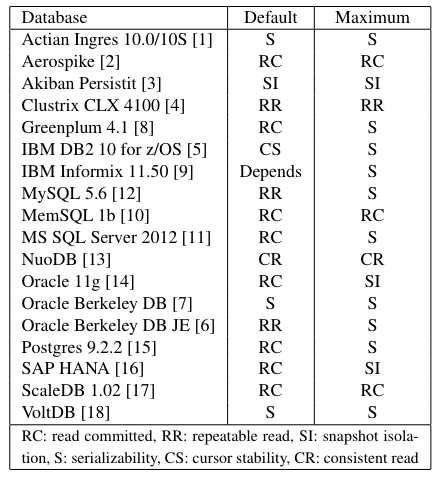

In recent work in the AMPLab and at Berkeley, we asked the question: are all transactional models incompatible with the goal of high availability? With the help of Alan Fekete, a database isolation whiz visiting us from the University of Sydney, we looked at transactional models in the real world and were surprised to find that, in fact, many popular databases didn’t even offer serializability as an option to end-users at all! In most cases, databases offered “weaker” models like Read Committed and Snapshot Isolation by default (sometimes labeling these models as “serializable”!). There is good reason for this phenomenon: these levels offer increased concurrency and often fewer system-induced aborts due to deadlocks and contention. However, these isolation models also expose a range of concurrency anomalies to end-users. Take a look at the summary in the chart below:

Enter Highly Available Transactions

Given that many real-world transactions aren’t serializable, we set out to understand which are achievable with high availability (i.e, as Highly Available Transactions, or HATs). To answer this question, we had to distinguish between unavailable implementations and unavailable semantics: for example, a lock-based implementation of Read Committed is unavailable under partial failures, but the accepted definition of Read Committed is achievable if we design concurrency control with availability as a top priority (e.g., for Read Committed, a database can buffer client writes until commit). Surprisingly, we found that many useful models like ANSI Repeatable Read and atomic multi-item writes and reads are also achievable with high availability (see Figure 2 of our paper) for more details. We also considered a modified but important availability model where users maintain affinity with a set of servers–we prove that this “sticky availability” is required for common guarantees like “Read Your Writes” and the increasingly popular Causal Consistency guarantees.

To determine the benefit of achieving high availability, we both analyzed the fundamental costs of coordination and benchmarked a prototype HAT implementation. The fundamental cost of giving up availability is that each operation requires at least one round-trip time to a coordination service (e.g., a lock manager or optimistic concurrency control validation service) to safely complete. In our measurements on EC2, this equated to a 10-100x increase in latency compared to HAT datacenter-local traffic. While our goal was not to design optimal implementations of HAT semantics, we were able to measure the overhead of a simple HAT implementation of Read Committed and atomic reads and writes (“Monotonic Atomic View”). A HAT system is able to scale linearly because replicas can serve requests without coordination: in our deployments, the HAT system was able to utilize all available replicas instead of a single replica for each data item (e.g., with two replicas, the HAT system achieved 2x the throughput).

A detailed report of our findings is available online, and we will present our results at VLDB 2014 in China next year.

Takeaways and a View of the Future

Given that transactional functionality is often thought to be “too expensive” for scalable data stores, it’s perhaps surprising that so many of the properties offered by real-world transactional data stores are actually achievable with availability and therefore horizontal scalability. Indeed, unavailable implementations may surface isolation anomalies less frequently than their HAT counterparts (i.e., in expectation, they may provide more intuitive behavior), but the worst-case behavior is the same. An isolation model like Read Committed is difficult to program and is, in many ways, no easier to program against than, say, eventual consistency. However, many of the techniques for programming Read Committed databases (e.g., “SELECT FOR UPDATE”) are also applicable to and have parallels to NoSQL stores.

What’s next for HAT databases? As we discuss in the paper, we think many applications can get away with HAT semantics, but, in some cases, coordination will be required. Understanding this trade-off will be key to programmability of future “coordination-free” data stores–after all, users ultimately care about consistency of their application, not necessarily the specific form of isolation or low-level, read/write data consistency offered by their data stores. Based on early results, we are bullish that even traditional OLTP applications can benefit from the techniques we’ve developed, from cheaper secondary indexing and foreign key constraints to full-blown transactional applications applying coordination only when it is (provably) required. Stay tuned.